Difference between revisions of "Jessi"

m (→Gallery) |

(→Competitions) |

||

| (36 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

{{Infobox IGVC | {{Infobox IGVC | ||

| robot_name = Jessi | | robot_name = Jessi | ||

| − | | image_path = | + | | image_path = Jessii.jpg |

| − | | image_alt_text = | + | | image_alt_text = Jessii alt text |

| year_start = 2017 | | year_start = 2017 | ||

| year_end = 2018 | | year_end = 2018 | ||

| Line 12: | Line 12: | ||

| highest_finish_autonav = n/a | | highest_finish_autonav = n/a | ||

| highest_finish_design = 1st | | highest_finish_design = 1st | ||

| + | | last_robot = Woodi | ||

}} | }} | ||

= Competitions = | = Competitions = | ||

=== IGVC 2018 === | === IGVC 2018 === | ||

| − | *Results | + | *Results: |

** Distance: 5 ft | ** Distance: 5 ft | ||

| − | ** Design Competition Placement: 2nd | + | ** Design Competition Placement: 2nd (401.33 / 480 points in finalist round; 1299/1400 points in group stages) |

| − | ** AutoNav Competition Placement: | + | ** AutoNav Competition Placement: 4th |

=== IGVC 2019 === | === IGVC 2019 === | ||

| − | *Results | + | *Results: |

** Distance: 16 ft | ** Distance: 16 ft | ||

** Design Competition Placement: 1st | ** Design Competition Placement: 1st | ||

| − | ** AutoNav Competition Placement: | + | ** AutoNav Competition Placement: 3rd |

| + | |||

| + | === IGVC 2021 === | ||

| + | *Results: | ||

| + | ** Distance: 47 ft | ||

| + | ** Design Competition Placement: 3rd | ||

| + | ** AutoNav Competition Placement: 3rd | ||

= Versions = | = Versions = | ||

| Line 31: | Line 38: | ||

== Jessi == | == Jessi == | ||

| − | + | === At Competition === | |

| + | |||

| + | |||

| + | === Mechanical Design & Issues === | ||

| − | |||

| − | + | === Electrical Design & Issues === | |

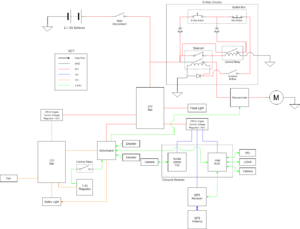

[[Image:IGVC Electrical Overview.png | 300px | thumb | Jessi Electrical Overview]] | [[Image:IGVC Electrical Overview.png | 300px | thumb | Jessi Electrical Overview]] | ||

| − | + | ||

| + | === Software Design & Issues === | ||

== Jessii == | == Jessii == | ||

| − | + | === At Competition === | |

Though the team was well-prepared going into 2019 IGVC, several issues came up during competition. On the first day, the mbed was experiencing issues and causing the computer to brownout. The mbed was fried while attempts were being made to fix the problem. Eventually this was solved with a new mbed and a large capacitor, which prevented brownouts from happening, though the whole process of fixing the robot took up the first day of the competition. During the second day, major progress was made; the team was 4th to qualify, which was the earliest Georgia Tech has ever qualified in IGVC. Additionally, the robot had a few successful runs on the course. Most notably, the great backwards run tragedy of 2019 happened. During this run, the robot turned itself around and went BACKWARDS on the course, though it went extremely far. This ended up being (absolute-value) the second longest run achieved by any robot in the competition this year, though it didn't count towards our score since it was backwards. | Though the team was well-prepared going into 2019 IGVC, several issues came up during competition. On the first day, the mbed was experiencing issues and causing the computer to brownout. The mbed was fried while attempts were being made to fix the problem. Eventually this was solved with a new mbed and a large capacitor, which prevented brownouts from happening, though the whole process of fixing the robot took up the first day of the competition. During the second day, major progress was made; the team was 4th to qualify, which was the earliest Georgia Tech has ever qualified in IGVC. Additionally, the robot had a few successful runs on the course. Most notably, the great backwards run tragedy of 2019 happened. During this run, the robot turned itself around and went BACKWARDS on the course, though it went extremely far. 
This ended up being (absolute-value) the second longest run achieved by any robot in the competition this year, though it didn't count towards our score since it was backwards. | ||

| − | |||

| − | ==== Electrical Design & | + | === Mechanical Design & Issues === |

| + | We tried to make Jessii a more modular and accessible version of Jessi. | ||

| + | |||

| + | |||

| + | === Electrical Design & Issues === | ||

For Jessii, the electrical team focused on iterating on several components of Jessi's system to improve weaknesses seen at the prior competition. | For Jessii, the electrical team focused on iterating on several components of Jessi's system to improve weaknesses seen at the prior competition. | ||

*Custom Computer and Improved Sensors | *Custom Computer and Improved Sensors | ||

**To improve computational capabilities and performance we upgraded our computer and other sensors | **To improve computational capabilities and performance we upgraded our computer and other sensors | ||

| − | ***Intel i7- | + | ***Intel i7-8700 3.2GHz 6 Core Processor, Nvidia GTX 1060 GPU, 32GB RAM |

****GPU was chosen for CUDA support | ****GPU was chosen for CUDA support | ||

****Hotswap capability was brought using a PCB though not implemented before competition | ****Hotswap capability was brought using a PCB though not implemented before competition | ||

| Line 63: | Line 76: | ||

**The logic board, the custom PCB which handles motor control switched from a virtual serial interface from MBed to a custom Ethernet communication protocol for robustness | **The logic board, the custom PCB which handles motor control switched from a virtual serial interface from MBed to a custom Ethernet communication protocol for robustness | ||

| − | + | === Software Design & Issues === | |

| − | + | ==== Design & Improvements ==== | |

| + | The software team performed a large overhaul of the codebase, focusing primarily on increasing the robustness of various algorithms used. | ||

| + | * Perception | ||

| + | ** We used the [https://velodynelidar.com/vlp-16.html VLP-16] 3D Lidar this year. We tried both RANSAC plane detection and Progressive Morphological Filters, but ended up using RANSAC for competition as it was more tested. | ||

| + | ** We switched from the FCN-8 architcture to the U-net architecture for the neural net for image segmentation. | ||

| + | * Localization | ||

| + | ** We used the same [https://github.com/cra-ros-pkg/robot_localization Robot Localization] package as last year for localization. | ||

| + | * Mapping | ||

| + | ** We kept with the same occupancy grid mapping strategy. However, we revamped the actual algorithm to be a lot more mathematically sound by using a binary bayes filter instead of simply incrementing a counter. In addition, we included a proper sensor model for our various sensors, so that knowledge of both free space and occupied space are used when mapping. | ||

| + | * Global Path Planning | ||

| + | ** The global path planning algorithm was changed from a janky A* implementation to a janky Field D* algorithm, which yeilded smoother paths. | ||

| + | * Local Path Planning | ||

| + | ** The local path planning algorithm still used the same smooth control law as before. However, instead of using a pure-pursuit style algorithm to follow the path, the robot paths directly to waypoints on the path and performs motion profiling, so that the robot is able to stay on the path. | ||

| + | ==== Issues ==== | ||

| + | We faced a few issues this year: | ||

| + | * Robustness of line detection | ||

| + | ** We moved from a pretrained FCN8 to a U-net architecture that didn't have pretraining. As a result, on the final day, our neural net had a decent amount of false negatives. | ||

| + | * Computational efficiency of global path planning | ||

| + | ** We used the Field D* for global path planning. However, it wasn't computationally efficient, and failed to find a path in a reasonable amount of time at the beginning of the run, when it saw lines that extended a fair distance in front. | ||

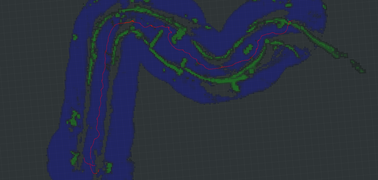

| + | * Because we were seeing lines in front and not behind us and the first waypoint was located to the side of the starting location, the global path planner planned a path that went behind us, leading to the famous reverse run. | ||

= Additional Information = | = Additional Information = | ||

| Line 74: | Line 106: | ||

<gallery mode='packed-hover'> | <gallery mode='packed-hover'> | ||

| − | Image:Jessi.jpg | Jessi | + | Image:jessiphotoshoot.jpeg | Jessi in real life |

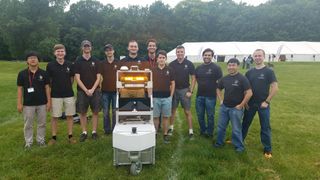

| + | Image:Jessiteambeforecomp.jpg | Jessi team before going to comp | ||

| + | Image:Jessiteamtesting.jpg | Jessi team testing during comp | ||

| + | Image:barrelking.jpeg | Barrel king. | ||

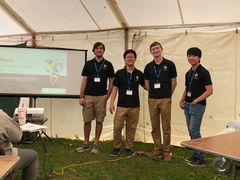

| + | Image:Jessidesignpresentation.jpg | Jessi team giving design presentation | ||

| + | Image:tomasblanket.jpg | Tomas sleeping on the grass | ||

| + | Image:Jessiteamduringcomp.jpg | Jessi team with the robot | ||

| + | Image:Jessiteamduringcomp2.jpg | Jessi team with the robot | ||

| + | Image:Woodishoutout.jpg | Woodi shoutout on Jessi | ||

| + | Image:Jessiteamwithaward.jpg | Jessi team with the 2nd place design award | ||

| + | Image:Jessi.jpg | Jessii CAD | ||

Image:Jessiibeforecomp.jpg | Jessii before going to competition | Image:Jessiibeforecomp.jpg | Jessii before going to competition | ||

Image:Jessiiteam1.jpg | Jessii team on day 1 of comp | Image:Jessiiteam1.jpg | Jessii team on day 1 of comp | ||

| Line 80: | Line 122: | ||

Image:Jessiiteam2.jpg | Jessii team after qualifying | Image:Jessiiteam2.jpg | Jessii team after qualifying | ||

Image:engineeringzipties.jpg | Zipties used to gain traction | Image:engineeringzipties.jpg | Zipties used to gain traction | ||

| + | Image:nighttesting.jpeg | Jessii testing during comp at night | ||

| + | Image:designcertificate.jpg | Jessii design 1st place certificate | ||

| + | Image:designaward.jpg | 1st place design plaque | ||

| + | Image:3rdplacecertificate.jpg | Jessii grand prize 3rd place certificate | ||

| + | Image:jessiifordtour.jpg | Jessii team on a Ford tour | ||

| + | Image:jessiidesignpresentation.jpg | Jessii team giving design presentation | ||

| + | Image:jessii-backwards-run.png | Jessii's route and map generated during the backwards run | ||

</gallery> | </gallery> | ||

| − | [[ | + | [[Category:IGVC]] |

| − | |||

Latest revision as of 19:55, 30 March 2022

| Jessi | |
|---|---|
| Year of Creation | 2017-2018 |
| Versions | |
| Latest Revision | Jessii |
| Revision Years | 2018-2019 |
| Information and Statistics | |
| Farthest Distance | 16 ft |
| Fastest Time | n/a |
| Highest Finish AutoNav | n/a |
| Highest Finish Design | 1st |
| Previous Robot | Woodi |

Competitions

IGVC 2018

- Results:
  - Distance: 5 ft
  - Design Competition Placement: 2nd (401.33/480 points in finalist round; 1299/1400 points in group stages)
  - AutoNav Competition Placement: 4th

IGVC 2019

- Results:
  - Distance: 16 ft
  - Design Competition Placement: 1st
  - AutoNav Competition Placement: 3rd

IGVC 2021

- Results:
  - Distance: 47 ft
  - Design Competition Placement: 3rd
  - AutoNav Competition Placement: 3rd

Versions

Jessi

At Competition

Mechanical Design & Issues

Electrical Design & Issues

Software Design & Issues

Jessii

At Competition

Though the team was well-prepared going into IGVC 2019, several issues came up during the competition. On the first day, the mbed malfunctioned and caused the computer to brown out, and the mbed was fried during attempts to fix the problem. This was eventually solved with a new mbed and a large capacitor, which prevented further brownouts, but the repairs took up the entire first day of the competition. Major progress was made on the second day: the team was 4th to qualify, the earliest Georgia Tech had ever qualified at IGVC, and the robot completed a few successful runs on the course. Most notably, the great backwards run tragedy of 2019 happened. During this run, the robot turned itself around and drove BACKWARDS along the course, covering an extremely long distance. By absolute distance, this was the second-longest run achieved by any robot at that year's competition, though it didn't count towards our score since it was backwards.

Mechanical Design & Issues

We tried to make Jessii a more modular and accessible version of Jessi.

Electrical Design & Issues

For Jessii, the electrical team iterated on several components of Jessi's system to address weaknesses seen at the prior competition.

- Custom Computer and Improved Sensors
  - To improve computational capability and performance, we upgraded the computer and several sensors:
    - Intel i7-8700 3.2 GHz 6-core processor, Nvidia GTX 1060 GPU, 32 GB RAM
      - The GPU was chosen for CUDA support.
      - A PCB was designed to add hot-swap capability, but it was not implemented before competition.
    - Velodyne Puck VLP-16 3D LiDAR
    - YostLabs 3-Space Micro USB IMU
- E-Stop
  - E-Stop circuitry was consolidated onto a dedicated E-Stop PCB.
  - A relay replaced the transistor used in version 1.0.
- Ethernet Communication Protocol
  - The logic board (the custom PCB that handles motor control) switched from the mbed's virtual serial interface to a custom Ethernet communication protocol for robustness.
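This page does not describe how the custom Ethernet protocol is framed. As a purely hypothetical illustration of how a custom protocol (for example over UDP datagrams) can be made more robust than a virtual serial link, the sketch below wraps a motor command with a sequence number and a CRC-32 so the receiver can drop corrupted or stale frames. The frame layout, field names, and the `encode_command`/`decode_command` helpers are all invented for this example and are not the team's actual protocol.

```python
import struct
import zlib

# HYPOTHETICAL frame layout (not the team's real protocol), little-endian, no padding:
#   uint32 sequence | float32 left_speed | float32 right_speed | uint32 crc32(body)
_BODY = struct.Struct("<Iff")
_CRC = struct.Struct("<I")


def encode_command(seq, left, right):
    """Pack a motor command and append a CRC-32 computed over the body bytes."""
    body = _BODY.pack(seq, left, right)
    return body + _CRC.pack(zlib.crc32(body))


def decode_command(frame, last_seq):
    """Return (seq, left, right), or None for a truncated, corrupt, or stale frame."""
    if len(frame) != _BODY.size + _CRC.size:
        return None                       # wrong length: truncated or garbage
    body = frame[:_BODY.size]
    (crc,) = _CRC.unpack(frame[_BODY.size:])
    if zlib.crc32(body) != crc:
        return None                       # corrupted in transit
    seq, left, right = _BODY.unpack(body)
    if last_seq is not None and seq <= last_seq:
        return None                       # duplicate or out-of-order frame
    return seq, left, right


frame = encode_command(7, 0.5, -0.25)
ok = decode_command(frame, last_seq=6)
corrupt = bytearray(frame)
corrupt[5] ^= 0xFF                        # flip bits inside the left-speed field
bad = decode_command(bytes(corrupt), last_seq=6)
stale = decode_command(frame, last_seq=7)
```

The sequence check ignores 32-bit wraparound for simplicity; a real link would also need a timeout so the motors stop if frames stop arriving.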

Software Design & Issues

Design & Improvements

The software team performed a large overhaul of the codebase, focusing primarily on increasing the robustness of its algorithms.

- Perception
  - We used the VLP-16 3D LiDAR this year. We tried both RANSAC plane detection and Progressive Morphological Filters, but ended up using RANSAC for competition because it had been tested more thoroughly.
  - We switched from the FCN-8 architecture to the U-Net architecture for the image segmentation neural net.
- Localization
  - We used the same Robot Localization package as last year.
- Mapping
  - We kept the same occupancy grid mapping strategy, but revamped the algorithm to be much more mathematically sound by using a binary Bayes filter instead of simply incrementing a counter. We also added a proper sensor model for each of our sensors, so that both free-space and occupied-space observations are used when mapping.
- Global Path Planning
  - The global path planning algorithm was changed from a janky A* implementation to a janky Field D* implementation, which yielded smoother paths.
- Local Path Planning
  - The local path planner still used the same smooth control law as before. However, instead of following the path with a pure-pursuit-style algorithm, the robot drives directly to waypoints on the path and performs motion profiling, which keeps it on the path.
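RANSAC plane detection on the LiDAR cloud can be sketched in a few lines: repeatedly fit a plane through three random points, count how many points lie within a distance threshold of it, and keep the best-supported plane. Its inliers are treated as ground and the rest as potential obstacles. This is a minimal pure-Python illustration, not the team's implementation; the `ransac_plane` helper, the iteration count, the 5 cm threshold, and the synthetic point cloud are assumptions.

```python
import math
import random


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ransac_plane(points, iters=200, thresh=0.05, seed=None):
    """Fit n . p + d = 0 (unit normal n) to 3D points; return (n, d, inlier flags)."""
    rng = random.Random(seed)
    best_n, best_d, best_in = None, 0.0, []
    for _ in range(iters):
        p0, p1, p2 = rng.sample(points, 3)          # minimal sample: 3 points
        n = _cross(_sub(p1, p0), _sub(p2, p0))
        length = math.sqrt(_dot(n, n))
        if length < 1e-9:                            # collinear sample, skip it
            continue
        n = (n[0] / length, n[1] / length, n[2] / length)
        d = -_dot(n, p0)
        inliers = [abs(_dot(n, p) + d) < thresh for p in points]
        if sum(inliers) > sum(best_in):              # keep the best-supported plane
            best_n, best_d, best_in = n, d, inliers
    if best_n is None:
        raise ValueError("every sample was degenerate")
    return best_n, best_d, best_in


# Synthetic cloud: flat, slightly noisy ground plus a box-shaped obstacle above it.
rng = random.Random(0)
ground = [(rng.uniform(-5, 5), rng.uniform(-5, 5), rng.gauss(0, 0.01)) for _ in range(300)]
boxes = [(rng.uniform(1, 2), rng.uniform(1, 2), rng.uniform(0.3, 1.0)) for _ in range(60)]
cloud = ground + boxes
n, d, is_ground = ransac_plane(cloud, seed=1)
obstacles = [p for p, g in zip(cloud, is_ground) if not g]
```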
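The mapping change can be sketched as a binary Bayes filter in log-odds form. Instead of incrementing a counter, each observation adds evidence for or against occupancy, and every beam also marks the cells it passes through as free. This is a minimal sketch, not the team's code: the `OccupancyGrid` class, the 0.7/0.4 inverse-sensor-model probabilities, and the ±5 clamping bounds are illustrative assumptions, and ray tracing (finding which cells a beam crosses) is taken as already done.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


# ASSUMED inverse sensor model values, for illustration only (not tuned team values).
L_OCC = logit(0.7)        # evidence added when a beam ends in a cell
L_FREE = logit(0.4)       # evidence added when a beam passes through a cell
L_PRIOR = logit(0.5)      # 0.0: no information yet
L_MIN, L_MAX = -5.0, 5.0  # clamping lets cells change state when the world changes


class OccupancyGrid:
    def __init__(self, width, height):
        self.log_odds = [[L_PRIOR] * width for _ in range(height)]

    def update_cell(self, x, y, hit):
        # Binary Bayes filter in log-odds form: l_t = l_{t-1} + inverse_model(z_t) - l_0
        l = self.log_odds[y][x] + (L_OCC if hit else L_FREE) - L_PRIOR
        self.log_odds[y][x] = min(L_MAX, max(L_MIN, l))

    def update_ray(self, cells, hit_end=True):
        """cells: grid cells crossed by one beam, sensor cell first, endpoint last."""
        for (x, y) in cells[:-1]:
            self.update_cell(x, y, hit=False)   # free space along the beam
        x, y = cells[-1]
        # Endpoint is occupied if the beam returned, free if it reached max range.
        self.update_cell(x, y, hit=hit_end)

    def probability(self, x, y):
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[y][x]))


grid = OccupancyGrid(5, 1)
for _ in range(3):
    grid.update_ray([(0, 0), (1, 0), (2, 0), (3, 0)])
p_free = grid.probability(1, 0)
p_occ = grid.probability(3, 0)
p_unknown = grid.probability(4, 0)
```

After three identical beams, the endpoint cell rises to roughly 0.93 occupancy probability, the traversed cells fall to roughly 0.23, and the unobserved cell stays at 0.5, which a simple hit counter cannot express.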
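Motion profiling toward the next waypoint can be illustrated with a trapezoidal velocity profile: accelerate at a fixed rate, cruise, then decelerate so the robot arrives at rest. The page does not say which profile shape the team used, so the trapezoidal shape, the `trapezoidal_velocity` function, and the example limits (1 m/s, 0.5 m/s^2) are assumptions.

```python
import math


def trapezoidal_velocity(t, distance, v_max, a_max):
    """Commanded speed at time t for a rest-to-rest move of `distance` meters.

    Accelerate at a_max, cruise at v_max, decelerate at a_max. If the move is too
    short to reach v_max, the profile degenerates to a triangle.
    """
    t_acc = v_max / a_max
    d_acc = 0.5 * a_max * t_acc ** 2
    if 2 * d_acc > distance:              # triangle: peak speed stays below v_max
        t_acc = math.sqrt(distance / a_max)
        v_peak = a_max * t_acc
        t_cruise = 0.0
    else:
        v_peak = v_max
        t_cruise = (distance - 2 * d_acc) / v_max
    t_total = 2 * t_acc + t_cruise
    if t <= 0 or t >= t_total:
        return 0.0
    if t < t_acc:
        return a_max * t                  # ramp up
    if t < t_acc + t_cruise:
        return v_peak                     # cruise
    return a_max * (t_total - t)          # ramp down


# Example: drive 4 m to the next waypoint with v_max = 1 m/s and a_max = 0.5 m/s^2.
speeds = [trapezoidal_velocity(t, 4.0, 1.0, 0.5) for t in (1.0, 3.0, 5.0)]
# [0.5, 1.0, 0.5]
```

The area under the profile equals the distance to the waypoint, so integrating the commanded speed brings the robot to rest at the waypoint rather than overshooting it.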

Issues

We faced a few issues this year:

- Robustness of line detection
  - We moved from a pretrained FCN-8 to a U-Net that was not pretrained. As a result, on the final day, our neural net produced a fair number of false negatives.
- Computational efficiency of global path planning
  - Field D* was not computationally efficient. At the beginning of the run, when it saw lines extending a fair distance ahead, it failed to find a path in a reasonable amount of time.
- Because we saw lines in front of the robot but not behind it, and the first waypoint was located to the side of the starting location, the global path planner planned a path that went behind the robot, leading to the famous reverse run.
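The reverse run can be reproduced on a toy grid. This sketch uses plain A* instead of Field D*, and it assumes that cells the robot has never observed are treated as free; the page does not state how unknown space was handled, and the grid size, positions, and lane lines are invented. With lane lines known only ahead of the robot and the waypoint off to the side, the cheapest route goes around the near end of a line, which is behind the robot.

```python
import heapq


def astar(start, goal, blocked, width, height):
    """4-connected A* on a grid; any cell not in `blocked` (including unseen ones) is free."""
    def h(c):
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])  # admissible Manhattan heuristic

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:           # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue                          # stale heap entry
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if not (0 <= nxt[0] < width and 0 <= nxt[1] < height) or nxt in blocked:
                continue
            new_cost = cost + 1
            if new_cost < g.get(nxt, float("inf")):
                g[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


# +y is "forward". The robot sits in a lane; lines were seen only at y >= 4.
W, H = 14, 24
robot, waypoint = (5, 4), (10, 5)
seen_lines = {(x, y) for x in (3, 7) for y in range(4, 22)}
path = astar(robot, waypoint, seen_lines, W, H)
went_behind = min(y for _, y in path) < robot[1]
```

Going around the far end of the line would take 40 moves, while stepping behind the robot takes 8, so any optimal planner picks the backwards route unless the unseen space behind the robot is penalized or the line is assumed to continue.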